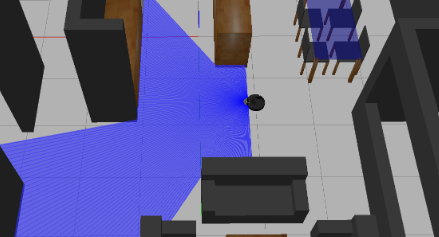

Vacuum Cleaner

Introduction

You have probably heard of Roomba and other house-cleaning robots. In this exercise we are going to implement the algorithm for a vacuum-cleaning robot. The aim is to clean as much of the house's floor as possible; time is not a constraint. Our robot is equipped with 180 lasers arranged in a semicircle at its front. They let it sense when it is close to a wall, so that it can avoid collisions.

Laser measurement processing

As we saw in the previous exercise, each laser outputs a single number: the distance to the first obstacle in that direction. This information is key to sensing our surroundings. However, we won't use the raw data as-is, because there is some math we must do first. To begin with, we will translate these numbers into polar coordinates. Since the 180 lasers are spread over a 180-degree semicircle, we know the angle at which each laser is pointing. With this information we can build a polar...
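The pairing of each distance with its laser's angle can be sketched in Python as follows. This is a minimal illustration, not the exercise's own code. It assumes one laser per degree, with laser 0 pointing to the robot's right (0 rad) and laser 90 pointing straight ahead (π/2). The names `NUM_LASERS`, `to_polar`, and `is_near_wall`, as well as the ±30° "ahead" window, are hypothetical choices made for this example. The exercise's actual laser interface is not shown here.

```python
import math
from typing import List, Tuple

# Assumption: 180 lasers, one per degree. Laser i points at i degrees,
# measured counter-clockwise from the robot's right side, so laser 90
# points straight ahead. Angles are returned in radians.
NUM_LASERS = 180


def to_polar(distances: List[float]) -> List[Tuple[float, float]]:
    """Pair each raw laser distance with the angle its laser points at."""
    if len(distances) != NUM_LASERS:
        raise ValueError(f"expected {NUM_LASERS} readings, got {len(distances)}")
    step = math.pi / NUM_LASERS  # 180 degrees over 180 lasers: 1 degree each
    return [(d, i * step) for i, d in enumerate(distances)]


def is_near_wall(polar: List[Tuple[float, float]], threshold: float,
                 half_width: float = math.radians(30)) -> bool:
    """Hypothetical helper: True if any reading within +/- half_width
    of straight ahead (pi/2) is closer than threshold."""
    ahead = math.pi / 2
    return any(d < threshold for d, a in polar if abs(a - ahead) <= half_width)


if __name__ == "__main__":
    readings = [3.0] * NUM_LASERS
    readings[90] = 0.4  # simulate an obstacle directly ahead
    polar = to_polar(readings)
    print(polar[90])  # (distance, angle in radians) of the front laser
    print(is_near_wall(polar, threshold=0.5))
```

Keeping the angle next to each distance is what allows later steps to reason about direction. For example, `is_near_wall` only looks at readings roughly in front of the robot and ignores close obstacles off to the sides.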